Using Screaming Frog to Keep a Monolithic Web Site Hygienic

I worked on an Adobe Experience Manager install for about two years. This particular company used AEM like a stock WordPress website with no plugins. We had custom components and policies on templates, but really only one template, which was a free-for-all, and we used few of the “Experience” extras.

What struck me most about AEM was that it lacks “site hygiene” tools. Things like link checkers, mass spell-check, and full-text search just don’t exist.

But we had a copy of Screaming Frog SEO Spider, and it does all of those things.

Link Checking

Screaming Frog has “SEO” in its name, but it can do a lot more. Here are some memorable moments from those AEM days. Using SF:

- We found a number of pages that had been published before they were ready.

- We found that a lot of pages had been accidentally flagged “do not index” in Page Properties (no wonder Google didn’t index them!).

- We had to find out whether disabling the bio page of a certain asshole who had been caught having a #metoo moment was enough. (It wasn’t: we found three images and two blog posts, and management told us to take them down.)

I had a pretty lit SF configuration. I don’t have the actual config anymore, so I can’t share it, but I remember sending it to coworkers so they could set up their own installs of Screaming Frog, and they loved it. Every time I found a new trick, I’d send it out, and everyone would update and benefit.

Here are a few of the things I configured. This is not an exhaustive list, but it covers the things I thought were most interesting.

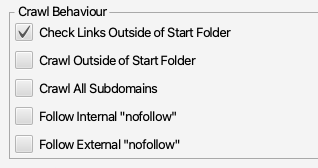

Limit the Scope

One issue you can run into is turning Screaming Frog loose on your site without limiting how many links it will check. You end up spidering far more pages than you need to, which wastes Screaming Frog’s time and your web server’s resources, and the signal-to-noise ratio goes down. Even with 100 pages you are going to be overwhelmed with information, so let’s set up some sanity limits.

Go to Configuration, then Spider. You want to modify the Crawl Behavior.

Be sure to check links outside the Start Folder, but you don’t necessarily want to crawl all of them. When it only checks them, Screaming Frog will tell you if a link from your site to an external page returns a 404 Not Found, but it won’t crawl onward from those pages.
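To make the check-versus-crawl distinction concrete, here is a toy breadth-first crawler in Python. It is only a sketch of the idea, not how Screaming Frog works internally: the `SITE` dictionary stands in for real HTTP fetches, and `www.example.com`, the `/blog/` start folder, and all page paths are hypothetical. Links inside the start folder are queued for crawling; anything outside it is recorded for a status check but never crawled.

```python
from collections import deque
from urllib.parse import urljoin, urlparse

# Hypothetical site: each page URL maps to the hrefs found on that page.
# A real crawler would fetch and parse HTML instead.
SITE = {
    "https://www.example.com/blog/": [
        "/blog/post-1/",
        "/about/",
        "https://partner.example.org/page",
    ],
    "https://www.example.com/blog/post-1/": ["/blog/", "/blog/post-2/"],
    "https://www.example.com/blog/post-2/": ["https://partner.example.org/missing"],
}


def in_scope(link, start):
    """A link is in scope if it is on the same host and under the start folder."""
    base, target = urlparse(start), urlparse(link)
    return target.netloc == base.netloc and target.path.startswith(base.path)


def crawl(start, max_pages=100):
    """Crawl in-scope pages breadth-first, stopping after max_pages.

    Out-of-scope links are collected so their status can be checked once,
    but they are never added to the crawl queue.
    """
    queue = deque([start])
    seen = {start}
    crawled, to_check = [], set()
    while queue and len(crawled) < max_pages:
        url = queue.popleft()
        crawled.append(url)
        for href in SITE.get(url, []):
            link = urljoin(url, href)
            if not in_scope(link, start):
                to_check.add(link)  # check its status, don't crawl it
            elif link not in seen:
                seen.add(link)
                queue.append(link)
    return crawled, to_check


crawled, to_check = crawl("https://www.example.com/blog/")
print("crawled:", crawled)
print("check only:", sorted(to_check))
```

The `max_pages` cap plays the role of a sanity limit: even if the site graph were huge, the crawl stops after that many pages.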

Here’s a list of my “Exclude” URLs (with the domain removed). The entries are regular expressions, which is why many of them end in .* wildcards. SF simply ignores these URLs because they were outside the scope of the pages I could control, and those teams had other ways to handle link checking.

https://www.------.com/events.*

https://www.------.com/login/.*

https://www.------.com/technology/about/.*

https://www.------.com/en/?

https://www.------.com/privacy.*

https://www.------.com/technology/contact/.*

https://www.------.com/technology/events/.*

https://www.------.com/technology/site-index.jsp

https://www.------.com/ngw/etc/designs/.*

https://www.------.com/en/ruxitagentjs_ICA2SVfqr_10137171222133618.js

https://www.------.com/it-glossary/
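Before pasting patterns like these into the Exclude box, it can help to test them offline. The Python sketch below checks sample URLs against a few of the patterns above, with the hypothetical domain `www.example.com` standing in for the redacted one. It assumes a pattern has to match the whole URL (`re.fullmatch`), which is how the trailing `.*` wildcards read; check Screaming Frog’s documentation for your version’s exact matching rules.

```python
import re

# A subset of the exclude patterns above; www.example.com is a
# hypothetical stand-in for the redacted domain.
EXCLUDES = [
    r"https://www.example.com/events.*",
    r"https://www.example.com/login/.*",
    r"https://www.example.com/en/?",
    r"https://www.example.com/technology/site-index.jsp",
]
COMPILED = [re.compile(p) for p in EXCLUDES]


def is_excluded(url):
    """True if any pattern matches the entire URL (assumed full-match semantics)."""
    return any(p.fullmatch(url) for p in COMPILED)


for url in [
    "https://www.example.com/events/2019/summit",
    "https://www.example.com/en/",
    "https://www.example.com/en/products",
    "https://www.example.com/technology/blog/",
]:
    print(is_excluded(url), url)
```

Under that assumption, `/en/?` excludes only the `/en` landing page, not the pages beneath it. Also note that an unescaped dot matches any character; escape it as `\.` when precision matters.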

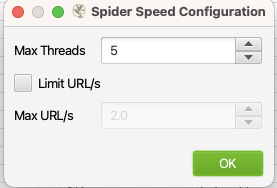

Screaming Frog can request multiple pages at once, but if you want to take it nice and easy, go to Configuration -> Speed and lower Max Threads. You can also check the box to limit the number of URLs per second (entering 0.5 makes it crawl one URL every two seconds). I often ran SF in the background while I worked, with 1 thread at 0.5 URL/s, and my laptop didn’t even notice.
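Throttling has an obvious cost: time. As a back-of-the-envelope sketch, crawl time at a fixed rate is just pages divided by URLs per second. For a site of around 15k pages, 0.5 URL/s works out to more than eight hours, which is why running it in the background made sense. The helper name is made up for illustration.

```python
def crawl_hours(pages, urls_per_second):
    """Approximate crawl time in hours at a fixed rate limit.

    Ignores anything else that could slow a crawl (slow responses,
    retries, JavaScript rendering), so treat the result as a lower bound.
    """
    return pages / urls_per_second / 3600


for rate in (0.5, 1.0, 2.0):
    print(f"{rate} URL/s -> {crawl_hours(15_000, rate):.1f} hours")
```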

Custom Search

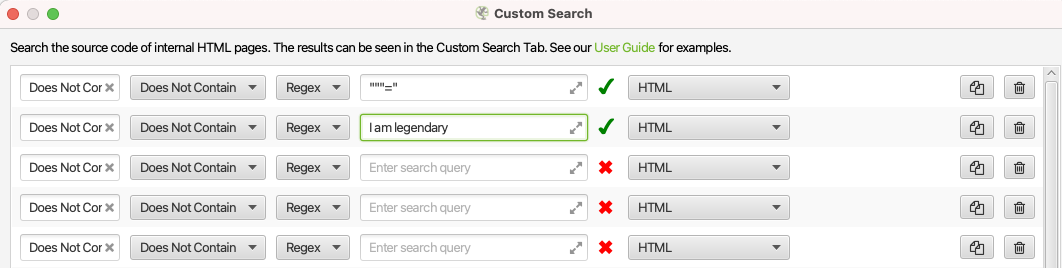

Say you want to check whether any page contains a specific word or phrase. Maybe you just adopted a new slogan and want to scrub the old one. WordPress lets you search posts for words and phrases, but CMSes like Adobe Experience Manager, incredibly, do not handle this well. For AEM, Screaming Frog’s Custom Search let us find these kinds of things.
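Custom Search is configured in the Screaming Frog UI, but the idea behind it is easy to sketch. This Python example assumes a few hypothetical in-memory pages and a hypothetical retired slogan, “Simply smarter”. It strips the markup (skipping `<script>` and `<style>` contents) and counts case-insensitive matches per page. It approximates the technique; it is not Screaming Frog’s implementation.

```python
import re
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Collect a page's text content, skipping <script> and <style> bodies."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def pages_containing(pages, phrase):
    """Return {url: match_count} for pages whose text contains the phrase."""
    pattern = re.compile(re.escape(phrase), re.IGNORECASE)
    hits = {}
    for url, html in pages.items():
        collector = TextCollector()
        collector.feed(html)
        collector.close()
        # Join text nodes and collapse whitespace so "Simply <b>smarter</b>" still matches.
        text = re.sub(r"\s+", " ", "".join(collector.parts))
        count = len(pattern.findall(text))
        if count:
            hits[url] = count
    return hits


# Hypothetical pages; the slogan only appears in a script on /about/.
PAGES = {
    "https://www.example.com/": "<p>Simply <b>smarter</b> banking.</p>",
    "https://www.example.com/about/": (
        "<p>Built for tomorrow.</p><script>var s = 'simply smarter';</script>"
    ),
    "https://www.example.com/blog/": "<p>SIMPLY SMARTER was our line. Simply smarter!</p>",
}

hits = pages_containing(PAGES, "Simply smarter")
print(hits)
```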

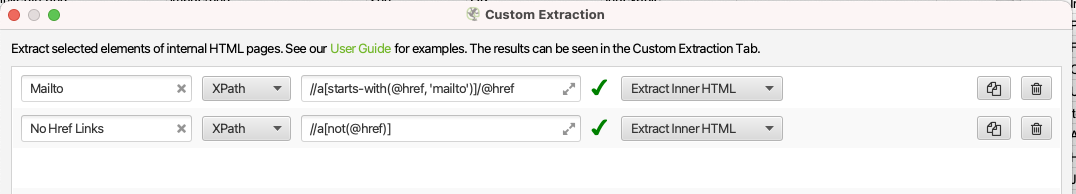

Custom Extraction

When I was working on that AEM project, IT built a component that, under certain circumstances, created an empty link: the tag looked like <a>Something, something, etc</a>, with no href. It wasn’t caught during testing or approval, got out into the wild, and became a problem. The IT team said they couldn’t find all of the places it occurred, so I used the “No Href Links” line above to find them all, and we fixed them in an hour.
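Screaming Frog’s Custom Extraction accepts XPath, and an expression such as `//a[not(@href)]` selects anchors with no `href` attribute; that expression is an assumption here, not necessarily what the “No Href Links” line in the screenshot contains. The same check is sketched below in Python with the standard-library HTML parser, run on a hypothetical snippet. It also flags `href=""` and a bare `href` with no value. Legitimate named anchors (`<a name="...">`) get flagged too, so expect a few false positives on older content.

```python
from html.parser import HTMLParser


class NoHrefFinder(HTMLParser):
    """Record the link text of every <a> whose href is missing or empty."""

    def __init__(self):
        super().__init__()
        self.found = []
        self._in_bad_link = False
        self._text = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value is None for a bare attribute.
        if tag == "a" and not dict(attrs).get("href"):
            self._in_bad_link = True
            self._text = []

    def handle_endtag(self, tag):
        if tag == "a" and self._in_bad_link:
            self.found.append("".join(self._text).strip())
            self._in_bad_link = False

    def handle_data(self, data):
        if self._in_bad_link:
            self._text.append(data)


html = (
    '<p><a href="/ok/">Fine</a> '
    "<a>Something, something, etc</a> "
    '<a href="">Empty</a></p>'
)
finder = NoHrefFinder()
finder.feed(html)
finder.close()
print(finder.found)
```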

We also had a lot of issues with AEM authors not creating “mailto:” and “tel:” links where appropriate. The “mailto:” issue shows up as a 404, because without the scheme the browser treats the address as a relative path, so the links end up looking like:

https://www.website.com/event/email@example.com

I still added a custom extraction for it, plus one for “tel:” links, the ones that let a visitor on a mobile browser tap a number and have it pop up in the phone’s dialer.
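A rough heuristic for the same problem: look at the last path segment of each href and flag it if it looks like an e-mail address or phone number but the link lacks a `mailto:` or `tel:` scheme. The regular expressions and the seven-digit threshold below are assumptions chosen for illustration, not a complete validator for every address or number format. Dates in URLs such as `/2019-10-31` would be false positives.

```python
import re

# Heuristic patterns (assumptions): an e-mail-like token, and a run of
# phone-number characters.
EMAIL_RE = re.compile(r"[^@\s/]+@[^@\s/]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?[\d\s().-]{7,}")


def check_href(href):
    """Return a warning if href looks like an e-mail or phone number without its scheme."""
    h = href.strip()
    if h.lower().startswith(("mailto:", "tel:")):
        return "ok"
    # Relative hrefs get resolved against the page URL, so inspect the last segment.
    last = h.rstrip("/").rsplit("/", 1)[-1]
    if EMAIL_RE.fullmatch(last):
        return "missing mailto:"
    if PHONE_RE.fullmatch(last) and sum(c.isdigit() for c in last) >= 7:
        return "missing tel:"
    return "ok"


for href in [
    "email@example.com",
    "https://www.website.com/event/email@example.com",
    "mailto:email@example.com",
    "555-555-0123",
    "tel:+15555550123",
    "/contact/",
]:
    print(f"{check_href(href):16} {href}")
```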

404 Bonanza

About halfway through my tenure, our team absorbed a set of AEM pages, and it quickly became clear that they had tons of 404 errors. Management asked us to fix them, but at a low priority. A coworker and I took the list, probably 1,000 URLs at the start, and fixed them little by little. Fixing all of them in AEM would have taken a few weeks of full-time work, so instead we divvied up 25 or 50 per week, and eventually only a few recently introduced errors were left to deal with. I don’t think upper management ever really saw it as a problem, but the errors were eating up support-team time and other resources. Once they were all fixed, those teams had a few extra hours a week, which was a small cost savings for the company.
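The little-by-little approach is easy to plan up front. This Python sketch de-duplicates a 404 list and deals it out in weekly batches. The URLs, the two-person team, and the per-person quota are hypothetical parameters; the post says only that we divvied up 25 or 50 per week, not exactly how.

```python
from itertools import islice


def weekly_batches(urls, people, per_person):
    """Yield (week, {person: [urls]}) until the de-duplicated list is used up."""
    remaining = iter(dict.fromkeys(urls))  # drop duplicates, keep original order
    week = 1
    while True:
        # Each person takes the next per_person URLs from the shared iterator.
        assignment = {p: list(islice(remaining, per_person)) for p in people}
        assignment = {p: batch for p, batch in assignment.items() if batch}
        if not assignment:
            return
        yield week, assignment
        week += 1


# Hypothetical list of 1,000 broken URLs shared between two people.
broken = [f"https://www.example.com/page-{i}" for i in range(1000)]
plan = list(weekly_batches(broken, ["me", "coworker"], 25))
print(len(plan), "weeks")
print(plan[0][1]["me"][:2])
```

At 25 URLs each per week, two people clear 1,000 URLs in 20 weeks.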

All in all, Screaming Frog checked around 15,000 pages every week and helped keep the site clean.